This Workspace was specifically created for the Working with Large Language Models event hosted by Women in Data (WiD) in collaboration with DataCamp.

Some of the examples were taken from the courses Working with the OpenAI API, which is available on DataCamp, and Introduction to LLMs in Python, which will be released in December 2023.

Workflow for Working with an AI API (Closed-Source)

For many use cases, especially when you want to get a proof of concept (POC) off the ground quickly, an AI API can be a great low-setup solution.

Installing the Latest Version of the openai Library

There were some major updates to the library when v1 was released on November 6, 2023, so we'll install the latest version.

# If something breaks: !pip install --force-reinstall "openai==1.3.4" --quiet

!pip install openai --upgrade --quiet --no-warn-script-location

import openai

print(f"openai version: {openai.__version__}")

Creating an API Key

To create a key, you'll first need to create an OpenAI account by visiting their signup page. Next, navigate to the API keys page to create your secret key.

Note: OpenAI sometimes provides free credits for the API, but this can differ depending on geography. You may also need to add debit/credit card details.

Best Practices for Using API Keys

- Rule 1: Don't expose your key in client-side code! Hard-coding a key in scripts or applications can result in it being inadvertently stored or shared, which puts it at risk of being used by malicious actors. Instead, store it as an environment variable on your system and access it from your script.

- Rule 2: See Rule 1!

from .... import ....

# Search env variables for OPENAI_API_KEY by default

# If a different variable name is used, use OpenAI(api_key=os.environ["VAR_NAME"])

client = ....
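One possible way to follow Rule 1 is sketched below. It assumes the key is stored in an environment variable (OPENAI_API_KEY by default) and that openai v1 or later is installed. The helper names read_api_key and make_client are hypothetical and are not part of the openai library; OpenAI(api_key=...) is the constructor referred to in the comment above.

```python
import os


def read_api_key(var_name="OPENAI_API_KEY"):
    """Return the API key stored in the given environment variable."""
    api_key = os.environ.get(var_name)
    if not api_key:
        # Fail early with a clear message rather than sending an unauthenticated request
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return api_key


def make_client(var_name="OPENAI_API_KEY"):
    """Create an OpenAI client (openai>=1.0) without hard-coding the key."""
    # Imported here so read_api_key can be used even if openai is not installed
    from openai import OpenAI

    return OpenAI(api_key=read_api_key(var_name))
```

With the variable set, client = make_client() should behave the same as calling OpenAI() with no arguments, since the library looks up OPENAI_API_KEY by default.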

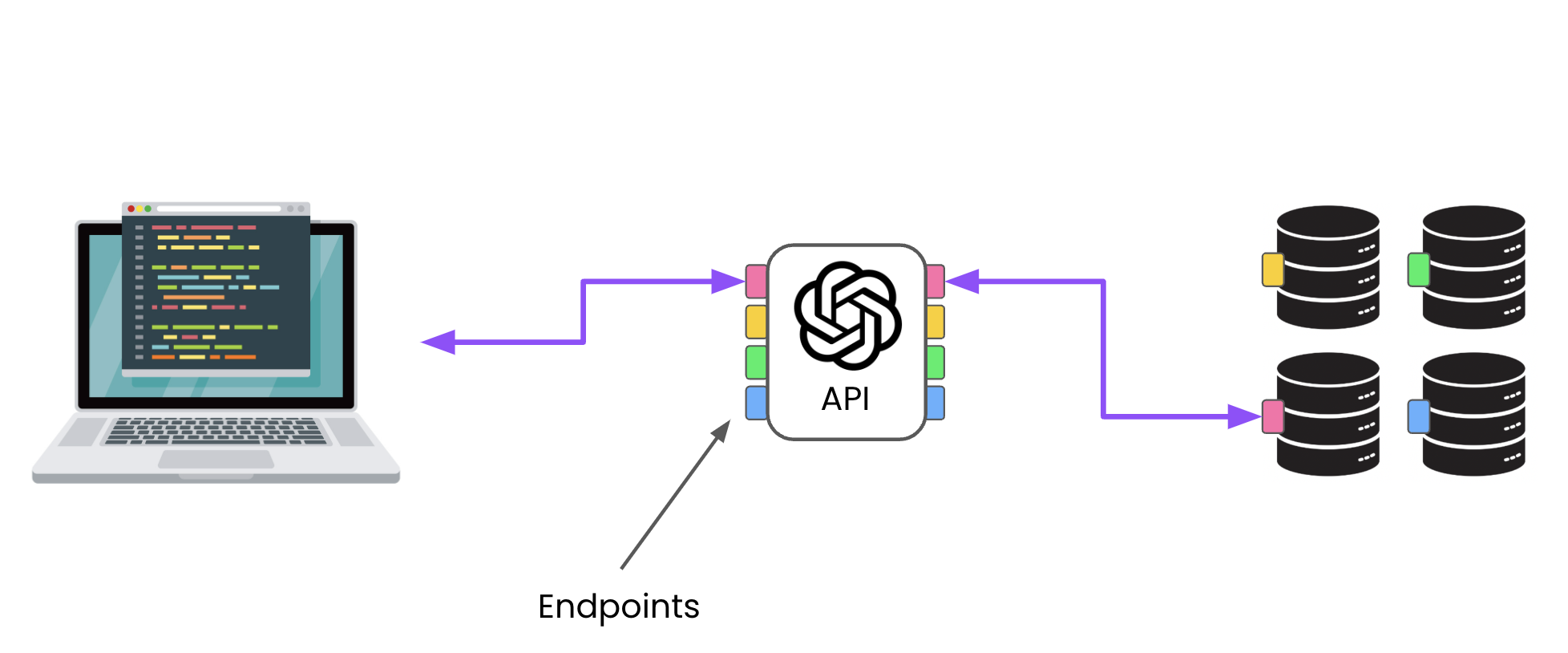

API Endpoints

API endpoints are access points to particular services. To access OpenAI's GPT models, we'll be using the Chat endpoint, but they also provide other endpoints, including:

- Audio: transcribe and translate audio using the Whisper model

- Embeddings: encode text as numeric vectors for easier processing by other models

- Fine-tuning: tailor a model to your own data

- Images: generate images from a text prompt with DALL·E 3

- Moderations: detect content that violates OpenAI's terms of use

- others...
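To illustrate how endpoints map onto the v1 client, here is a rough sketch that calls the Embeddings and Moderations endpoints. The function names embed_texts and is_flagged are hypothetical helpers, and the model name "text-embedding-ada-002" is an assumption that may need updating as OpenAI releases newer models. Both functions expect an already-created client.

```python
def embed_texts(client, texts, model="text-embedding-ada-002"):
    """Return one embedding vector (a list of floats) per input text."""
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


def is_flagged(client, text):
    """Return True if the Moderations endpoint flags the text."""
    response = client.moderations.create(input=text)
    return response.results[0].flagged
```

Each endpoint is an attribute on the client (client.embeddings, client.moderations, client.chat, and so on), so switching services mostly means changing which attribute you call.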

Chat Completion for Q&A

When provided with a question, the model will use the data it was trained on to generate a response.

BEWARE: LLMs sometimes hallucinate, which is the phenomenon where they state incorrect information as though it were correct. Hallucination often occurs when you approach the limits of the model's knowledge, e.g., by asking questions that go beyond its knowledge or abilities.

chat_completion = ....

print(chat_completion)
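As one way the Q&A request could look, the sketch below wraps a single-question call to the Chat endpoint. The helper name ask_question is hypothetical; the model name and the chat.completions.create call mirror the summarization example in this workspace. It assumes client is an OpenAI client created with a valid key.

```python
def ask_question(client, question, model="gpt-3.5-turbo"):
    """Send one user question to the Chat endpoint and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        # A single "user" message is enough for a one-off question
        messages=[{"role": "user", "content": question}],
    )
    # The response may hold several choices; take the text of the first one
    return response.choices[0].message.content
```

Printing the whole response object shows metadata such as token usage, while response.choices[0].message.content holds only the generated text.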

Text Summarization

One of the clearest use cases for LLMs is in summarization. In this task, GPT-3.5 captures the key information from the text and summarizes it based on any requirements that we may have.

text_sample = "The vision of OpenAI is to ensure that artificial general intelligence (AGI) benefits all of humanity. AGI refers to highly autonomous systems that outperform humans at most economically valuable work. OpenAI aims to build safe and beneficial AGI directly, but it is also committed to aiding others in achieving this outcome by actively cooperating with any value-aligned and safety-conscious project that comes closer to building AGI. OpenAI seeks to distribute the benefits of AGI broadly and prevent any harmful uses or concentration of power. They prioritize long-term safety, conduct research to make AGI safe, and drive the adoption of such research across the AI community. OpenAI is committed to democratic values, cooperation with other organizations, and actively cooperating with global partners to address AGI’s global challenges."

chat_completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {
            "role": "user",
            "content": ....,
        }
    ]
)

print(chat_completion.choices[0].message.content)
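A possible way to fill in the summarization prompt is sketched below. The prompt wording, the helper names build_summary_prompt and summarize, and the n_points and max_tokens defaults are all assumptions chosen for illustration, not the workshop's official solution.

```python
def build_summary_prompt(text, n_points=2):
    """Combine the summarization instruction and the text into one prompt."""
    return (
        f"Summarize the following text into {n_points} concise bullet points:\n\n"
        f"{text}"
    )


def summarize(client, text, n_points=2, model="gpt-3.5-turbo", max_tokens=200):
    """Ask the Chat endpoint for a bullet-point summary of the text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": build_summary_prompt(text, n_points)}],
        # Cap the reply length so the summary stays short and costs stay predictable
        max_tokens=max_tokens,
    )
    return response.choices[0].message.content
```

For example, print(summarize(client, text_sample)) would request a two-bullet summary of the sample text. Stating the requirements (length, format, audience) in the prompt is what steers the model's summary.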